Since nVidia announced its DGX GH200 GPU monstrosity a few months ago this summer, it's high time for another huge step forward in the AI space courtesy of the Cerebras Wafer Scale Engine-2.

The new AI supercomputer is from a much less well-known company than nVidia called Cerebras, which has developed a massive new processor dedicated to artificial intelligence and deep learning called the Wafer Scale Engine-2. The rather ordinary-sounding name belies a technological tour de force that even nVidia’s boss Jensen Huang might envy.

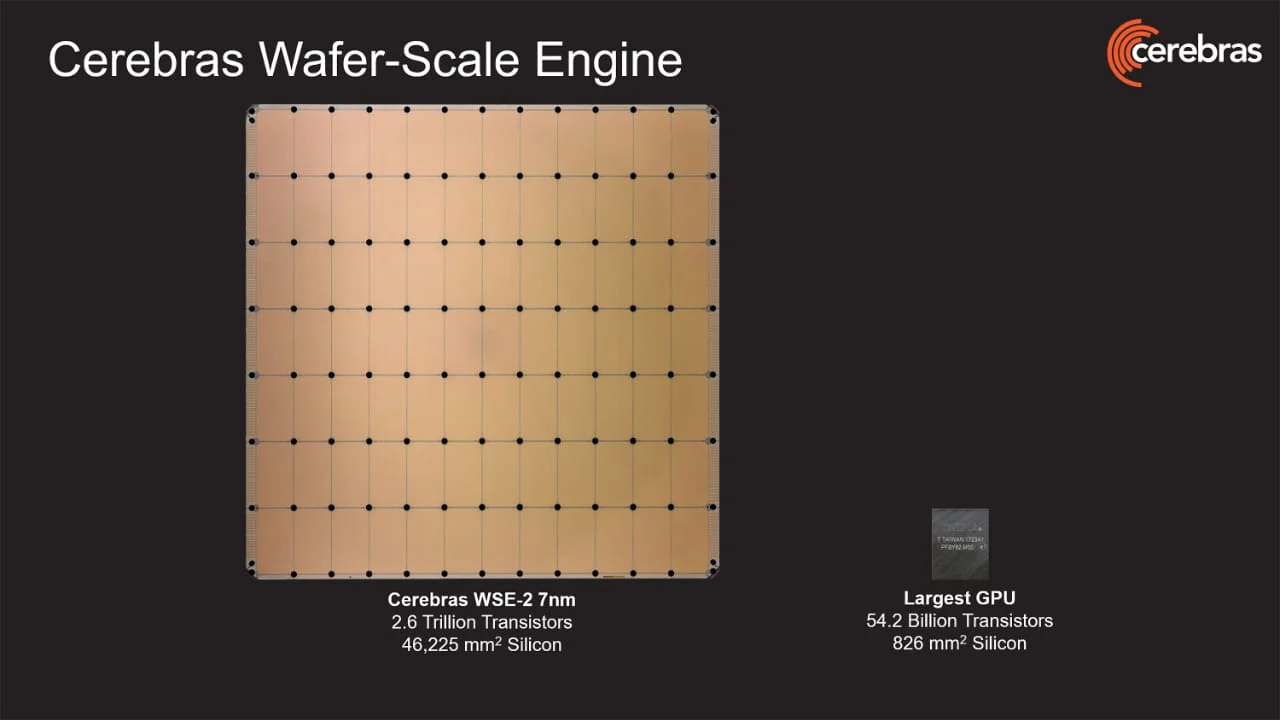

For starters, the reason for the name is that the processor is the size of an entire 12-inch wafer. The second generation WSE uses the TSMC 7nm process, and while nVidia's Hopper has an insane 80 billion transistors, the Cerebras WSE-2 has 2.6 TRILLION, providing 850,000 cores optimized for tensor computing. Each wafer/chip has 40GB of high-speed static RAM spread throughout the chip with a bandwidth of (hold on to your hat) 20 Petabytes per second. The interconnect providing the communications infrastructure to that wafer has (still holding your hat?) 220 Petabytes per second.

And it’s using this to build a supercomputer. Well, nine of them to be exact.

The Condor Galaxy 1 is an AI supercomputer built in partnership with AI service provider G42 and features 64 of these huge chips, for a total of 54 million cores to make a 4 Exaflop monster. When the Condor Galaxy's two siblings, creatively named CG-2 and CG-3 are also complete by early 2024, according to the company, all three will be linked to each other with dedicated fiber optic pipes.

These three AI supercomputers will form a 12 Exaflop, 162 million core distributed AI supercomputer consisting of 192 Cerebras CS-2s and fed by more than 218,000 high-performance AMD EPYC CPU cores.

When complete, the Condor Galaxy will comprise nine of these 64-chip supercomputers, all linked with fiber, for a collective 36 Exaflops of raw power. The first three are being built at Colovore, a high-density, liquid-cooled data center in Santa Clara, California, while the location of the next six has yet to be revealed.

The Cerebras clusters include a suite of technologies designed to make scaling up large AI tasks as easy as possible by providing hardware support for tasks like broadcasting weights on neural network edges and updating their weights.

Cerebras has also put a lot of effort into its software development kit (SDK). Even in a shared memory supercomputer like the nVidia DGX GH200, programmers have to write specialized code to divide their tasks up across processors. While it's far easier on a shared memory supercomputer than on a distributed cluster, the programmer still needs to have some knowledge about how to spread the work out across the compute engines. Cerabras' SDK aims to eliminate that part while also improving scaling.

Cerebras cites an example in which an nVidia DGX-A100 system with eight A100 GPUs completed a machine learning benchmark in 20 minutes, while a cluster of 540 A100 GPUs brought that time down to 13.5 seconds. However, while that is an 89x speed up, it required 540x the computing power; the scaling factor there is pretty poor. One of the factors contributing to this is that the complexity of distributing the workload also increases with the size of the cluster.

(It must be noted that the distribution complexity is lower with a shared memory system than with a cluster, so the new nVidia GH200 will scale better than the GH100 for that reason. Because systems of this scale take quite a while to build out though, even once their manufacturing is complete, there are no GH200 tests to compare with yet.)

In contrast, a machine learning application built on the Cerebras SDK can scale up to consume all available compute nodes with a config file change. The proprietary and machine learning optimized collection of technologies that Cerebras has built also enables much better scaling than usual; Cerebras claims that it can scale almost linearly, meaning that 540 Wafer Scale Engines are 540x faster than one, giving a genuinely 1:1 scaling factor.

The sheer power of even one Wafer Scale Engine-2 is incredible, and if Cerebras can achieve near-linear scaling then the full cluster of interconnected Galaxy clusters will offer the sort of power to challenge imagination when they all go online over the course of the next year or so.

▼▼▼

Read more latest news about the PCB and semiconductor industry here

+86 191 9627 2716

+86 181 7379 0595

8:30 a.m. to 5:30 p.m., Monday to Friday